Bugs are an inevitable part of software development. We hate them for the unusual strain they put on our reasoning capacity. But sometimes they may be the most interesting problems we encounter in our daily jobs. “Why doesn’t it work?” is a sentence every developer has shouted at least once in disbelief.

Exploring unusual problems in my software engineering career

Przemysław Wiczołek

Software Engineer

.NET Developer with interests in React and DevOps, who tries to challenge the status quo. Passionate about microservices, cloud and all other ways to improve the development process. After work a fan of cinema, gym enthusiast, philosophy amateur and fantasy bookworm.

In this blog post, I will explore a few interesting bugs I have encountered in my journey as a software engineer. Each of them was very out-of-the-ordinary and was profoundly satisfying to investigate and solve.

1. Environment-specific test failures

Firstly, a few words about the process setup:

- We had an automated build pipeline that included test execution.

- We were using MS Dynamics CRM for application state management.

- We were not using Docker, deployments were based on a published package.

- We were using on-premise Azure DevOps, so the servers were managed by our team.

Now, before you start googling, the only thing you need to know about MS Dynamics is that it wrapped a database entity into an additional structure. It sort of looked like this:

{

"Id": "1554d2e3-4a4f-4481-9e78-61c2fa538138"

"Attributes": {

"CreatedOn": "...",

"ModifiedOn": "...",

}

// Other entity specific fields ...

}The framework would automatically update CreatedOn / ModifiedOn attributes when you worked with the entity.

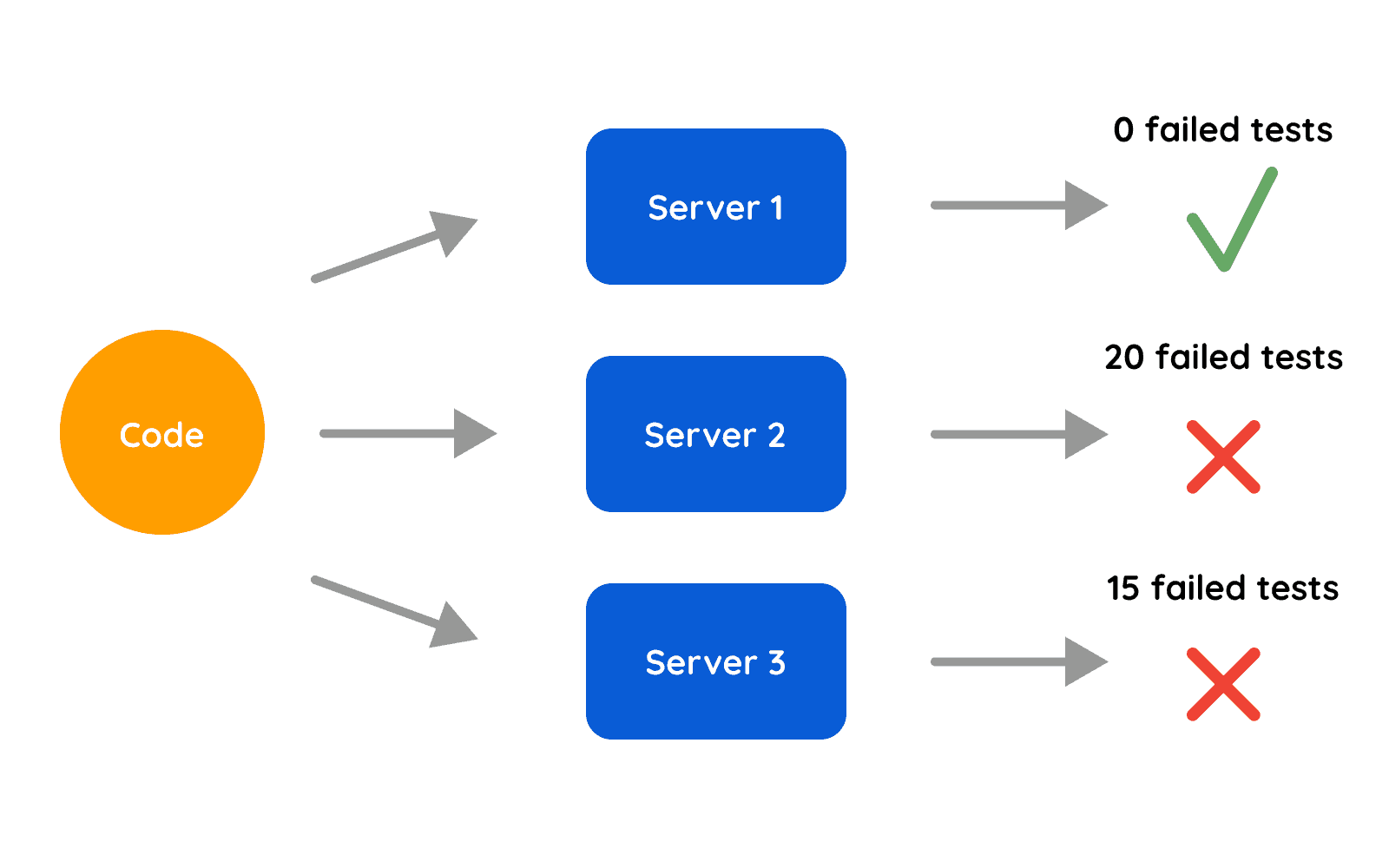

Our problem was that the unit tests were failing from time to time in the pipeline. To be specific, they always succeeded on a specific server but failed on others quite often. The servers selected for the build were chosen from the pool, so if you didn’t have the luck, you could have run the build multiple times, and it would fail a few times at no fault of your own. Not only was it annoying, but it slowed us down because we had to investigate whether these specific failures were transient or caused by a recent code change.

Another quirky thing was that the number of failing tests was not consistent. Sometimes we would get 15, and at other times 20. The tests also worked just fine locally.

At some point, I finally got to investigate it. The first thing I checked was, of course, what exactly was failing in the tests. Some of the tests were checking “CreatedOn” or “ModifiedOn” date after executing a piece of business logic to make sure a particular entity was created or modified. And these were actually the assertions that were sometimes failing.

Now, we weren’t using actual MS Dynamics in unit tests, but a testing library: Fake Xrm Easy. Fortunately, the library is open source, so I could dive into the external code to see how the attribute updates are handled. It looked pretty straightforward:

// ...

// this would update the underlying attribute

entity["modifiedon"] = DateTime.UtcNow;

// other updates ... So why isn’t it working? To confirm that the line was actually hit in the failing tests, I compiled the library with debugging symbols and then used it locally during test execution.

And indeed, the line was hit, everything seemed fine. The assertions should see that the date was updated after the logic was executed. And they did locally, but not in the pipeline!

In this specific case, I was fortunate to have direct access to build servers via remote desktop. The servers didn’t have any IDE, so I couldn’t simply debug it in the same way as in the local environment. But here I could just use simple Console.Log statements to see what was the state of the modified date after the testing library modified it and before the assertion itself. I have compiled the testing library with extra console logs and copied all the dlls necessary for testing execution to a build server.

I’ve finally got to test execution on the flaky server. And guess what? The modified date was the same before and after:

entity["modifiedon"] = DateTime.UtcNow; statement. An idea started forming in my mind. Why could it be that DateTime.UtcNow would return the same value twice? Certainly, the time was different, only by a few milliseconds, but still. And if you’re not from a C# background, DateTime object includes milliseconds and even tinier values. I started looking for some details about the precision of time resolution. And it turned out that it was actually possible to get the same value twice – the time resolution for DateTime.UtcNow varies from 0.5 to 15ms. So, it seems we’re there, we know why the tests were failing – the precision was too low to see that the value has changed between function calls. It also explained why the number of failing tests was inconsistent, if the logic took a few ms more due to server load, the tests would pass.

You may however recall that there was one server where the tests would always pass. And similarly, the tests were working locally. I couldn’t just let it go now, being so close to the full explanation, I had to know :D. I also felt that others wouldn’t be so sure about the validity of the answer if I didn’t provide full justification on why the tests were working from time to time. I dived deeper into time resolution documentation, and it turned out that it was highly dependent on the CPU - usually, the faster the CPU, the faster the time resolution.

You may have guessed by now that the specific server where the tests worked each time actually had a bit different hardware than the others. In particular, a much faster CPU. It was the same with our company laptops, they didn’t have problems with the tests since the resolution was good enough to work 99% of the time. So you couldn't easily detect it locally because even if the tests occasionally failed, they would probably succeed the second time you’ve run them. And the sheer act of debugging would also slow down the execution enough to make the tests pass.

After I had posted the explanation in the team chat, I received a “:O” reaction from nearly everyone in the team, which was a nice extra reward for all of the time I spent on this problem.

At this stage, the only remaining task was rather straightforward: fix the failing tests to work properly on each server. We used a special high-precision clock available in .NET, so after the test library modified the entity, we would override it with our high precision value. Problems were gone <3.

Lessons learned

- Use the same hardware for each of your servers if you are using on-premise or IaaS solutions. Problems related to different hardware will be especially painful to debug, because you will have to test your app on multiple types of hardware. These problems will often become transient and very difficult to reproduce.

- Avoid using time-based assertions in your tests. In our case, we could have avoided them by checking the change in the underlying value, but the logic was complex, and it was hard to determine. So sometimes it’s a necessary evil, but be cautious: Will the test pass on the first day of the month? On the last? On the first day of the year? On the 29th of February?

Don’t take debt from your future self or your colleagues.

2. Silent timeouts

Let's move on to a different project.

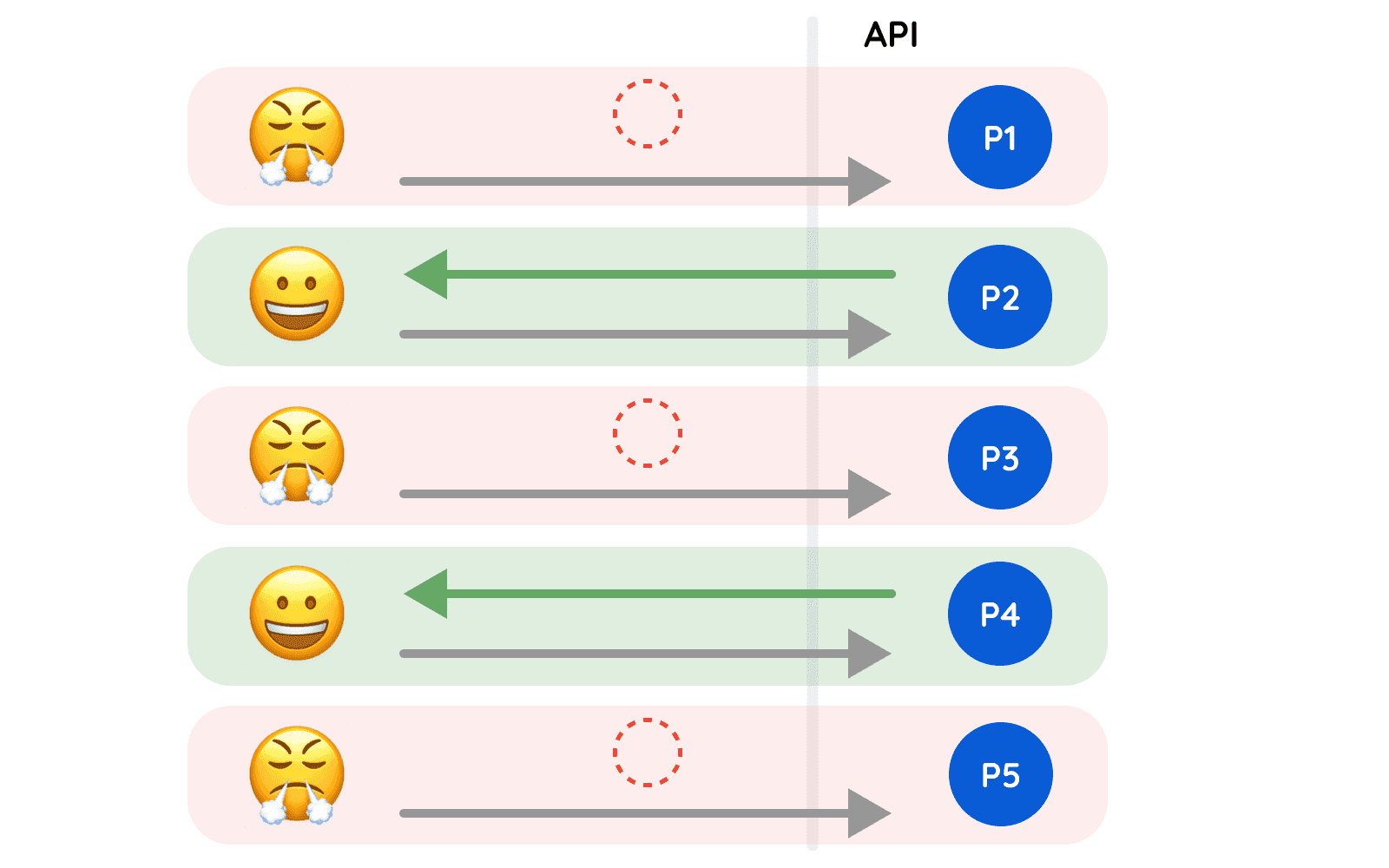

This problem started occurring the day after we released a new version of one of our APIs to the production environment. It seemed that the service was taking an unusually long time to respond to certain requests. There were numerous timeout errors in our logs. After a while, we identified that a single endpoint was causing the issues. Strangely, not all of the requests were failing; some seemed to pass through. Even with the exact same input, sometimes you'd get a timeout, while other times it executed without problems. You could imagine the problem like this:

The service was running on K8s. Eventually, we identified that only a subset of the service pods (aka mini-servers) was causing problems. The code and the docker image were the same everywhere, though. It was also running on the same hardware in a single cluster. We resorted to a standard initial solution - we redeployed the whole service, hoping it was a weird K8s quirk. And hurray! It started working again. For a while, at least... The problem seemed to return after some time. We checked the resource usage on the failing pods, but it seemed just fine – no CPU or memory usage spikes. Unfortunately we had also made some breaking changes to the db schema, so the rollback of the service wouldn’t be easy.

We didn’t see this issue before the deployment, which pointed to a code change. At this point we were already trying to reproduce the issue locally as well as in test environments and without much luck. We also didn’t have much logging in the particular area of code where the request was failing, so it was hard to tell where the root cause was. After reviewing the recent code changes for the service I’ve noticed that there were a few caching mechanisms added. Both for the whole app and per user. This led me to try to spam my local API with multiple simultaneous requests, simulating the normal cache usage. I was hoping to somehow narrow down the issue with extra logs.

I wanted to simulate a situation where multiple users hit the service with requests, but it was problematic to generate credentials for so many of them. I then modified the code so the user-level cache was invalidated each time. I also had a simple bash script at hand, which helped me send multiple requests at the same time.

And there it is! I managed to reproduce it. After a few minutes of spamming the service, some of the requests started timing out. Unfortunately, I couldn’t simply debug using an IDE, because hitting the breakpoints stops the whole service. At the beginning, the majority of the requests were passing, so it wasn’t simply a matter of setting the breakpoint further in the code. There was a huge amount of logs to investigate, but I slowly started to narrow down the area of the code where the issue seemed to be.

I finally noticed something unusual there, a semaphore, which set off some alarm bells in my mind. At first glance, it worked fine. The semaphore was released properly. The code looks roughly like this:

private string CACHE_KEY_FOO => "DATA_FOO"

private async Task<IList<DataFoo>> FetchDataFoo()

{

var dataCached = _memoryCache.Get<IList<DataFoo>>(CACHE_KEY_FOO);

if (dataCached != null)

{

return dataCached;

}

await _semaphore.WaitAsync();

dataCached = _memoryCache.Get<IList<DataFoo>>(CACHE_KEY_FOO);

if (dataCached != null)

{

return dataCached;

}

try

{

// simplified

var result = await FetchDataFoo();

_memoryCache.Set(CACHE_KEY_FOO, result, CacheExpiration.FiveMinutes());

return result;

}

finally

{

_semaphore.Release();

}

}So:

- If we have cached data, return it.

- Otherwise, call and wait until you can enter the semaphore. If we have cache data now, return it. Otherwise, fetch the data and fill the cache Finally (no matter what), release the semaphore.

You have probably already noticed the problem – the semaphore was not released in the 2a case. This wasn’t a user-level cache I mentioned earlier, but a global, per-service (or per-pod) cache. At first I still wasn’t sure what was the specific flow when the semaphore would block the whole service. If the data is in the cache, this shouldn’t matter, right? Because we just wouldn’t hit the semaphore. However, the next time the cache expires, we need to hit the semaphore to update the data. And here’s the problem: it’s blocked by a previous request which had hit the semaphore, but didn’t update the data and didn’t therefore release the semaphore. So all subsequent requests will also be blocked, there’s no data in the cache, so they all try to enter the semaphore. This problem would only occur when at the point of cache expiration, there would be 2 or more simultaneous requests which would try to update the cache. The issue would also affect all “first round” requests, which were waiting to update the cache but couldn’t enter the semaphore.

Once the issue was identified, it became relatively straightforward to correct. Unfortunately, it went through multiple testing phases undetected because the traffic generated by testers and developers was too low to reproduce it. This was the kind of issue most likely to be detected during code review, but it wasn’t caught this time.

Lessons learned

- Exercise special caution when writing and reviewing code that could impact the entire service. There's a high likelihood of either breaking it entirely (which is preferable) or only noticing the error after the next release (which is problematic).

- Bear in mind that having a standard suite of load tests may help not only in detecting performance issues, but also in measuring service stability and identifying bugs that can’t be easily detected through manual testing.

- Be very careful with thread synchronisation objects as improper usage can lead to deadlocks or race conditions, significantly impacting the stability and performance of the application.

3. How to play two videos at the same time?

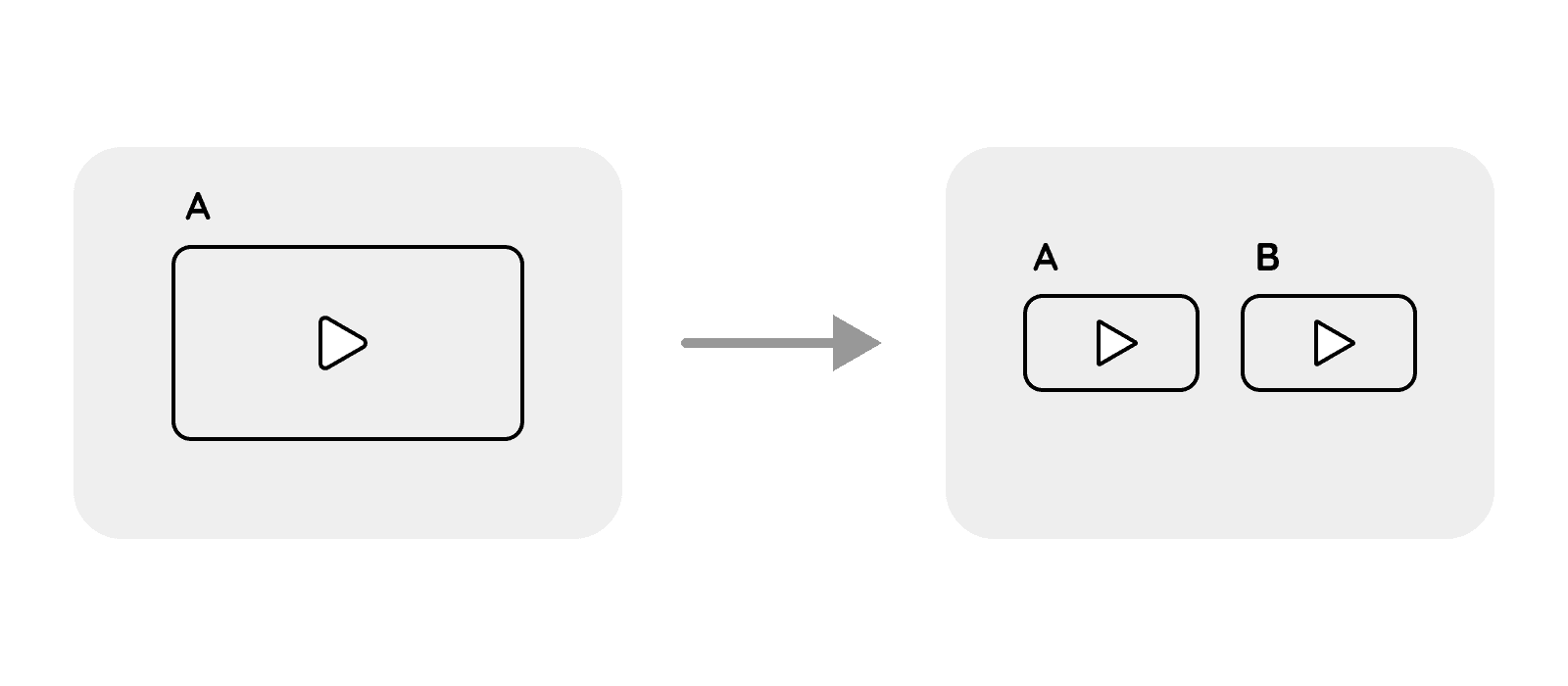

In this project we had an unusual requirement of loading the same video on the two subsequent pages. It looked like this:

On the second screen there were two videos and it was important to play them both at the same time. Both had roughly the same length. When you finished watching the first video, you were automatically redirected to the next screen. The website was hosted on Azure and was using Blob storage (cloud file storage) to store the videos.

Sounds rather simple, right? Not really. It was daaamn hard. Because the second video was a webcam recording of the user, generated during the first playback. At the same time, we also needed to send frames of the recording to the API to do some processing on them. The API then returned the data that would be displayed for each second of the playback on the next screen. We also had to temporarily store the recording in the browser storage, so the next page could access it.

One of the initial problems was that we had to capture individual frames of the camera recording. Sadly, to access them, you must have the entire video, it’s a structured format. Trying to access them during the recording just gives you a random binary file, not a frame. So we tried doing the processing at the end of the recording. Unfortunately, the processing time roughly corresponded to the video duration – 3 minutes for a 3-minute video. We couldn’t wait that long. We had to do this asynchronously, during the playback. This introduced extra complexity, because we also had to combine the results for the whole video at the end.

Ultimately, to capture frames during camera recording, we simultaneously recorded the user and generated a LOT of pictures, which acted as the frames. I was quite surprised that it actually worked. We used time as the frame filename send to API, enabling us to easily merge the results at the end. Even if some of the subsequent API calls failed, we could fill the gaps with empty data. This also addressed cases where later frames were processed faster than earlier ones, preventing misalignment in the data.

Now that we had the processing handled, we could finally display the results, which seemed rather simple compared to previous difficulties. My initial solution for this feature had been to just play both videos on the second screen, a moment after the user was redirected there. I didn’t expect any loading issues as the recording was stored locally and the first video was already played once on the previous page, so it would be cached by the browser.

After I deployed the feature to the production environment, it was noticed that the videos are not playing in sync. The video lagged behind the second one, even though it played without problems on the initial screen. The problem didn’t occur locally and, as an early stage startup, all we had was a single environment.

My initial thought was that there was a problem with throughput for the video access in Blob storage. At first, I began attempting to simulate the issue locally by using the production Blob storage instead of a simulated one. I could reproduce the issue then! So it was something with cloud storage, right?

I started investigating the speed of the connection, because the production Blob storage was placed in a region different to my physical location. There were some delays, not significant enough to cause any lags in the video loading. My second thought was that there was a problem with quick data access to Azure storage. For some reason, after the first video retrieval, it didn’t work as fast the second time. This didn’t make much sense, though, because if I played the video again on the same screen, it worked just as fine.

I began comparing the results of loading the video from the local Blob storage and the remote one. I checked what was displayed for the video in the browser's 'Network' tab. I’ve noticed the remote storage has been returning Cache-Control headers where my local storage wasn’t. Then, I recalled that for the sake of “better performance”, I set Cache-Control headers on the files in the production Blob storage. And this was the only difference. If the browser were using the cache, it should be even faster, not slower right?

Actually, not in this case. With my eyes wide open, I went through the flow 5 times, and each time I noticed a 10-20s delay in loading the video from the cache. Loading it the first time over an internet connection took roughly 5s. I was pretty confused about it. After a moment of googling, I found this stack overflow thread. It turned out it was a bug in the browser! In Chrome specifically. The flow worked fine on Firefox, but given that ~65% of users use Chrome (plus me ), we couldn't really ignore the issue.

Ultimately, after some internal fight, I decided to set the Cache-Control headers on these files to “no-cache”. The issue was gone, the video was loading without problems directly from the Blob storage. I felt a bit defeated this time because this was a workaround rather than an actual fix. However, after everything I went through with JavaScript to make two videos play simultaneously in a cross-browser compatible manner, I was relieved that it finally worked.

Lessons learned

- As of 2022, browser API for video processing and frame capture leaves much to be desired. There are some libraries, but they are hard to work with and may not function correctly across all browsers. I was unpleasantly surprised by the difficulty. Think twice about how you arrange such features because the browser may not simply support your flow.

- Sometimes, the most productive thing you can do is to workaround the bug. Spending too much time making it perfect will harm your project rather than help it.

- As the old saying goes, “Premature optimisation is the root of all evil.”

Summary

Each encountered problem was a unique, new challenge for me. It stretched my analytic abilities and software engineering expertise. These are the problems I remember many months after having the chance to investigate them. They definitely challenged my most basic assumptions, which is why they were sooo satisfying to resolve. At the end I always shout “I know! I know why it doesn’t work! I have to tell someone!!!!”. I’ll definitely stay attentive to such problems on a daily basis, even for the sheer joy of resolving them :)

Did you like my post? Did you hate it? Did you encounter similar challenges? Feel free to reach out to me on LinkedIn or drop me an email. I am curious to hear about them!